
Node.js Deep Dive - Part Two

- Author: Mahmoud Amr

Introduction:

Welcome to the second part of Node.js Deep Dive! In this installment, we dig deeper into the core mechanics that make Node.js an efficient runtime for building applications. We'll look at how Node.js runs JavaScript on a single thread while still performing I/O without blocking, and at the role Libuv, a critical component of Node.js's architecture, plays in enabling this behavior.

Photo by Kris-Mikael Krister on Unsplash

As a quick refresher, in Part One of the series, we explored the intriguing question: How can Node.js, a single-threaded environment, present itself as non-blocking?

The official Node.js "About" page describes the design this way: "[Node.js's event-driven model] is in contrast to today's more common concurrency model, in which OS threads are employed. Thread-based networking is relatively inefficient and very difficult to use. Furthermore, users of Node.js are free from worries of dead-locking the process, since there are no locks. Almost no function in Node.js directly performs I/O, so the process never blocks except when the I/O is performed using synchronous methods of Node.js standard library. Because nothing blocks, scalable systems are very reasonable to develop in Node.js."

Before looking at how it's done, let's talk about what it means to be single-threaded.

Understanding Single-Threaded Execution:

When we label a programming environment as single- or multi-threaded, we're essentially referring to how it manages the execution of statements. In a single-threaded environment like Node.js, our JavaScript runs on one thread, so only one statement is executed at a time.

Synchronous (or sync) execution means code runs in sequence: the program executes line by line, one line at a time. Each time a function is called, execution waits until that function returns before continuing to the next line of code.

In plain English: imagine you run a restaurant that is full of customers, but you have only one waiter. The waiter takes a single order, waits for the food to be cooked, and carries it to the customer before moving on to the next one. As you can imagine, this isn't the most efficient way to run a restaurant.
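The analogy maps directly onto code. The sketch below is a toy model, not Node.js internals: the helpers `cookBlocking`, `blockingWaiter`, and `nonBlockingWaiter`, the dishes, and the cooking times are all hypothetical. The blocking waiter "cooks" by busy-waiting on the CPU, while the non-blocking waiter hands each order to a `setTimeout` timer and goes straight to the next customer.

```javascript
// Toy model of the one-waiter restaurant (hypothetical names and timings).

// "Cooking" that blocks: spin the CPU until `ms` milliseconds have passed.
function cookBlocking(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // busy-wait: nothing else can run on this thread meanwhile
  }
}

// Blocking waiter: take an order, wait for the food, serve it, then move on.
function blockingWaiter(orders, log) {
  for (const { dish, cookMs } of orders) {
    log.push(`take ${dish}`);
    cookBlocking(cookMs);
    log.push(`serve ${dish}`);
  }
}

// Non-blocking waiter: hand each order to the "kitchen" (a timer) and go
// straight to the next customer; each dish is served when its timer fires.
function nonBlockingWaiter(orders, log) {
  return new Promise((resolve) => {
    let remaining = orders.length;
    for (const { dish, cookMs } of orders) {
      log.push(`take ${dish}`);
      setTimeout(() => {
        log.push(`serve ${dish}`);
        if (--remaining === 0) resolve(log);
      }, cookMs);
    }
  });
}

const orders = [
  { dish: "pasta", cookMs: 60 },
  { dish: "salad", cookMs: 10 },
];

const blockingLog = [];
blockingWaiter(orders, blockingLog);
console.log("blocking:    ", blockingLog.join(", "));

const asyncLog = [];
const allServed = nonBlockingWaiter(orders, asyncLog);
allServed.then((log) => console.log("non-blocking:", log.join(", ")));
```

The blocking log alternates take and serve for each customer in turn, while the non-blocking log takes both orders first and serves the salad (10 ms) before the pasta (60 ms).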

Back to the quotation above: how does Node.js avoid ever blocking the process? Or, in restaurant terms, how can our single waiter serve one more customer?

When we run a program on our computer, the operating system starts a process. A single process can contain multiple threads, where a thread is a sequence of instructions waiting to be executed by the CPU. When we start Node.js, a Node process runs on the machine, and inside that process Node executes our JavaScript on a single main thread (the process does have helper threads, as we'll see with Libuv's thread pool). That means there is only one call stack executing the program, so only one thing happens at a time: execution is synchronous, and while one piece of JavaScript is running, everything else has to wait until it finishes.
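A quick way to see the single call stack in action is to schedule a timer with a 0 ms delay and then keep the thread busy. In this sketch the 200 ms busy-wait is an arbitrary stand-in for any long synchronous computation; the timer cannot fire until that loop has finished and the call stack is empty.

```javascript
// One call stack: while synchronous code is running, no callback can run,
// not even a timer that is already due.
const events = [];
const start = Date.now();
let firedAfterMs = -1;

// Ask for this callback to run "as soon as possible" (a 0 ms delay).
setTimeout(() => {
  firedAfterMs = Date.now() - start;
  events.push("timer fired");
  console.log(events.join(" -> "), `(timer ran after ${firedAfterMs} ms)`);
}, 0);

// Hog the single JavaScript thread for about 200 ms (an arbitrary workload).
events.push("blocking work started");
while (Date.now() - start < 200) {
  // busy-wait: the event loop cannot get control back until this ends
}
events.push("blocking work finished");
```

However short the requested delay, the timer's entry always lands after "blocking work finished".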

This is where Libuv comes in to solve the problem. We mentioned Libuv in the previous article as one of the core dependencies that Node.js relies on. So what is Libuv?

Libuv

In essence, Libuv is a C library designed around event-driven asynchronous I/O. It gives Node.js a bridge to the underlying operating system's I/O facilities. Thanks to this bridge, the main thread can start an I/O operation and move on instead of waiting for it to finish, which is why Node.js applications rarely stall on I/O. You might wonder how this is possible.

Key Features and Mechanisms:

libuv is a multi-platform support library with a focus on asynchronous I/O. It was primarily developed for use by Node.js, but it’s also used by Luvit, Julia, uvloop, and others.

Libuv offers a range of features and mechanisms that underpin Node.js's performance:

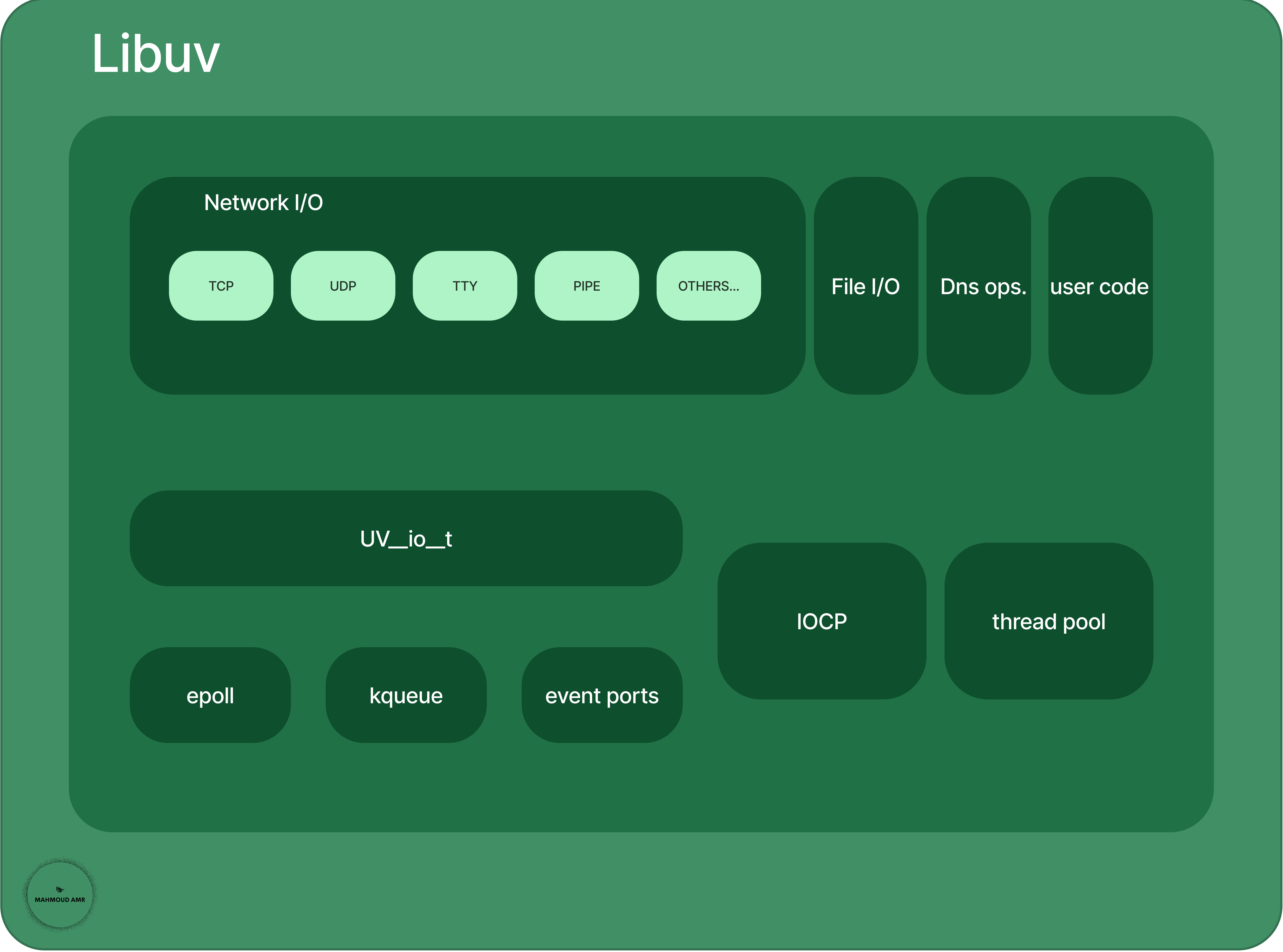

Network I/O: This category includes operations related to network communication, such as TCP, UDP, TTY, and pipes.

File I/O: This category includes operations related to file input/output, such as reading and writing files.

DNS Operations: This category includes operations related to resolving domain names into IP addresses.

User Code: This category includes the code written by the user that will be executed in response to I/O events.

uv__io_t: An internal libuv structure that watches a file descriptor (for example, a socket) for I/O readiness on Unix-like systems.

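epoll, kqueue, event ports, and IOCP: These are the operating-system-specific mechanisms libuv uses to wait for I/O events: epoll on Linux, kqueue on macOS and the BSDs, event ports on SunOS/illumos, and IOCP (I/O completion ports) on Windows.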

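Thread Pool: Libuv maintains a thread pool to offload work that has no non-blocking OS interface, such as file I/O and DNS lookups via getaddrinfo (Node.js also uses it for some crypto and zlib work), so the event loop stays responsive. The pool has 4 threads by default, configurable with the UV_THREADPOOL_SIZE environment variable.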
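The thread pool can be observed from JavaScript. This sketch relies on the asynchronous `crypto.pbkdf2()` being serviced by Libuv's thread pool, which is how Node.js runs it; the password, salts, and iteration count are arbitrary values chosen only to create noticeable CPU work. A 1 ms interval on the main thread acts as a heartbeat: it can only tick while the main thread is free.

```javascript
const crypto = require("node:crypto");

// Four CPU-heavy hashing jobs. The asynchronous pbkdf2() is executed on
// Libuv's thread pool (4 threads by default), not on the main thread.
const JOBS = 4;
const ITERATIONS = 200000; // arbitrary: just enough work to take a while
let finished = 0;
let heartbeats = 0;

// Main-thread "heartbeat": it can only tick if the main thread is free.
const heartbeat = setInterval(() => {
  heartbeats += 1;
}, 1);

const start = Date.now();
for (let i = 0; i < JOBS; i++) {
  crypto.pbkdf2("secret", `salt-${i}`, ITERATIONS, 64, "sha512", (err, key) => {
    if (err) throw err;
    finished += 1;
    console.log(`job ${i} done after ${Date.now() - start} ms (${key.length}-byte key)`);
    if (finished === JOBS) {
      clearInterval(heartbeat);
      console.log(`main-thread heartbeat ticked ${heartbeats} times meanwhile`);
    }
  });
}
```

With the default pool size of 4, the four jobs can run in parallel. Raising `JOBS` above 4 typically makes the extra jobs finish in a later batch, because they wait for a free pool thread; the exact timings depend on the machine.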

Features:

Full-featured event loop backed by epoll, kqueue, IOCP, event ports.

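TCP sockets (net module in Node.js)

UDP sockets (dgram module in Node.js)

Asynchronous DNS resolution (dns module in Node.js)

Asynchronous file and file system operations (fs module in Node.js)

File system events (fs.watch() in Node.js)

ANSI escape code controlled TTY (tty module in Node.js)

IPC with socket sharing, using Unix domain sockets or named pipes (Windows)

Child processes (child_process module in Node.js)

Thread pool (used internally by Node.js for fs, dns.lookup(), and asynchronous crypto and zlib work; not to be confused with the worker_threads module, whose workers are separate threads with their own event loops)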

Signal handling

High resolution clock

Threading and synchronization primitives

At its core, Libuv gives users three essential abstractions: handles, requests, and the event loop that drives them.

Handles: Monitoring and Operating on Resources

Handles, in the context of Libuv, are like vigilant sentinels: long-lived objects assigned to monitor or operate on a resource for as long as they are active. These resources include network sockets, timers, pipes, signals, and file-system watchers (plain file reads and writes are requests, covered below). Each handle is associated with a particular type of resource, such as a TCP handle or a timer handle, and its role is to keep an eye on events related to that resource.

When a handle is started (for example, when a timer is started or a socket begins reading), it is given a callback function. This callback serves as the handler for events that occur on the associated resource. For instance, with a network socket, the handle's callback is triggered when data arrives on that socket. This mechanism lets the application respond promptly to events without resorting to blocking operations.

Handles encapsulate the necessary information about a resource, including its state and the associated event loop. The event loop manages the monitoring of handles and directs the flow of events to the appropriate callback functions. This approach allows multiple resources to be monitored concurrently without blocking the application's execution.
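In Node.js, a `net.Server` is backed by a Libuv TCP handle internally, which makes it a convenient way to watch a handle at work. The sketch below starts a server on the loopback interface, connects a client to it, and lets the callbacks do the rest; port 0 asks the operating system for any free port, and the messages `hello` and `ack` are arbitrary.

```javascript
const net = require("node:net");

let serverReceived = "";
let clientReceived = "";

// The listening server is a long-lived handle: its connection callback
// runs every time a client connects, for as long as it stays open.
const server = net.createServer((socket) => {
  // Each accepted connection is another handle; its "data" callback runs
  // whenever bytes arrive, without the main thread waiting for them.
  socket.on("data", (chunk) => {
    serverReceived += chunk.toString();
    socket.end("ack\n");
  });
});

// Port 0 lets the operating system pick any free port.
server.listen(0, "127.0.0.1", () => {
  const { port } = server.address();
  const client = net.connect(port, "127.0.0.1", () => client.write("hello"));
  client.on("data", (chunk) => {
    clientReceived += chunk.toString();
  });
  client.on("end", () => {
    console.log(`server got "${serverReceived}", client got "${clientReceived.trim()}"`);
    server.close(); // stop listening so no active handles remain
  });
});
```

Nothing in the script waits: the connection and data callbacks fire as events arrive, and once the sockets and the server are closed, no active handles remain and the process exits on its own.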

Requests: Initiating I/O Operations

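Requests are another integral component of Libuv's architecture. While handles are long-lived and react to events, requests represent (typically) short-lived operations. When you need to perform an action like writing data to a socket or reading data from a file, you create a request for that operation. Some requests operate on a handle (a write request writes data to a stream handle such as a TCP socket), while others are standalone and run directly on the loop (file-system operations, or DNS lookups via getaddrinfo).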

Requests encapsulate the details required to perform an I/O operation, such as the data to be read or written, the target resource, and the intended operation. Once initialized, the request is submitted either on a handle (for example, uv_write() on a stream) or directly on the loop (for example, uv_fs_read() or uv_getaddrinfo()). When the operation finishes, the event loop invokes the request's callback, which handles the result.

By decoupling the initiation of I/O operations from their execution and completion, requests ensure that the application remains responsive and can efficiently manage multiple concurrent tasks. This architecture enables non-blocking behavior even when performing complex I/O operations.

Event Loop:

The event loop is like the maestro guiding an orchestra, ensuring that every instrument (handle) plays its part at the right time. It is the central component responsible for coordinating all concurrent operations in Node.js. It is a semi-infinite loop: it keeps running as long as there is still work to do (active handles such as timers or listening servers, or pending requests), and when no work remains, the loop exits and the process ends (Node.js emits the process 'exit' event at that point).
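The "keep running while there is work" rule can be observed directly. In this sketch, a referenced interval keeps the process alive until it is cleared after three ticks, while a timer marked with `unref()` is not counted as pending work, so the process exits without ever firing it. The delays are arbitrary.

```javascript
let ticks = 0;
let lateTimerFired = false;

// A referenced interval is an active handle: it keeps the loop alive...
const interval = setInterval(() => {
  ticks += 1;
  if (ticks === 3) clearInterval(interval); // ...until we clear it.
}, 10);

// An unref'd timer is not counted as pending work, so it cannot keep the
// process alive on its own; here it will never get the chance to fire.
const late = setTimeout(() => {
  lateTimerFired = true;
}, 10000);
late.unref();

// Emitted once the loop has run out of work and the process is exiting.
process.on("exit", () => {
  console.log(`ticks: ${ticks}, late timer fired: ${lateTimerFired}`);
});
```

Removing the `late.unref()` line would keep the process alive for the full 10 seconds, because the timer would then count as pending work.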

Both handles and requests are essential participants in Libuv's event-driven architecture. The event loop serves as the conductor of this symphony, directing the flow of events and ensuring that callbacks are invoked at the appropriate times. As events occur on monitored resources, the event loop triggers the corresponding callback functions associated with the relevant handles.

Requests, on the other hand, initiate I/O operations, either on a handle or directly on the loop. The event loop tracks them until they complete and then runs their callbacks, so operations proceed efficiently without blocking the overall process.
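To make the conductor metaphor concrete, here is a deliberately simplified, hypothetical event loop written in JavaScript. It is not how Libuv is implemented (Libuv is written in C and waits on the OS mechanisms listed earlier); the `ToyLoop` class and its simulated clock only model the idea: work is registered with a callback, the loop runs while anything is still pending, and it dispatches one callback at a time.

```javascript
// A toy, single-threaded event loop (hypothetical; a model, not Libuv's code).
class ToyLoop {
  constructor() {
    this.clock = 0;    // simulated time, in ms
    this.pending = []; // outstanding work: { at, label, callback }
    this.trace = [];   // the order in which callbacks were dispatched
  }

  // Register work (think: an active handle or an in-flight request) that
  // will produce an event `delayMs` after the current simulated time.
  schedule(label, delayMs, callback) {
    this.pending.push({ at: this.clock + delayMs, label, callback });
  }

  // Loop while any work remains, then return (the "process exits").
  run() {
    while (this.pending.length > 0) {
      // Take the earliest event. A real loop would instead block in
      // epoll/kqueue/IOCP until the OS reports that something happened.
      this.pending.sort((a, b) => a.at - b.at);
      const next = this.pending.shift();
      this.clock = next.at;
      this.trace.push(`${next.at}ms ${next.label}`);
      next.callback(this); // one callback at a time; it may schedule more work
    }
  }
}

const loop = new ToyLoop();
loop.schedule("timer", 30, () => {});
loop.schedule("file read done", 10, (l) => {
  // Starting new work from a callback keeps the loop alive longer.
  l.schedule("socket write done", 5, () => {});
});
loop.run();
console.log(loop.trace);
```

Here the trace is `10ms file read done`, `15ms socket write done`, `30ms timer`: callbacks are dispatched strictly one after another, and the write scheduled from inside the first callback keeps the loop running until it, too, completes.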

Conclusion:

In this article, we've explored the inner workings behind Node.js's single-threaded, non-blocking architecture. Libuv, with its handles, requests, and event loop, is the cornerstone of this design: it lets the single JavaScript thread hand I/O off to the operating system or to the thread pool and pick up the results through callbacks, which is what allows Node.js to handle many concurrent operations efficiently. As we conclude this part of our deep dive, we've gained insight into the intricacies that make Node.js such a powerful runtime.

However, there's a whole world to explore beneath the surface, particularly when it comes to the intricate workings of the event loop.

In the next part of the series, we'll dive deeper into the event loop, one of the most fascinating and crucial components of Node.js. We'll unravel its inner workings, demystify its mechanisms, and provide you with a comprehensive understanding of how it manages concurrency, handles callbacks, and keeps Node.js running smoothly.